Section: New Results

Motion planning in human-populated environments

We explore motion-planning algorithms that allow robots and vehicles to navigate in human-populated environments and to predict human motion. Since 2016, our work has focused on two directions: predicting pedestrian behavior in urban environments and mapping human flows. We have also started to investigate the navigation of telepresence robots in collaboration with GIPSA-Lab. These works are presented hereafter.


Urban Behavioral Modeling

Participants : Pavan Vasishta, Anne Spalanzani, Dominique Vaufreydaz.

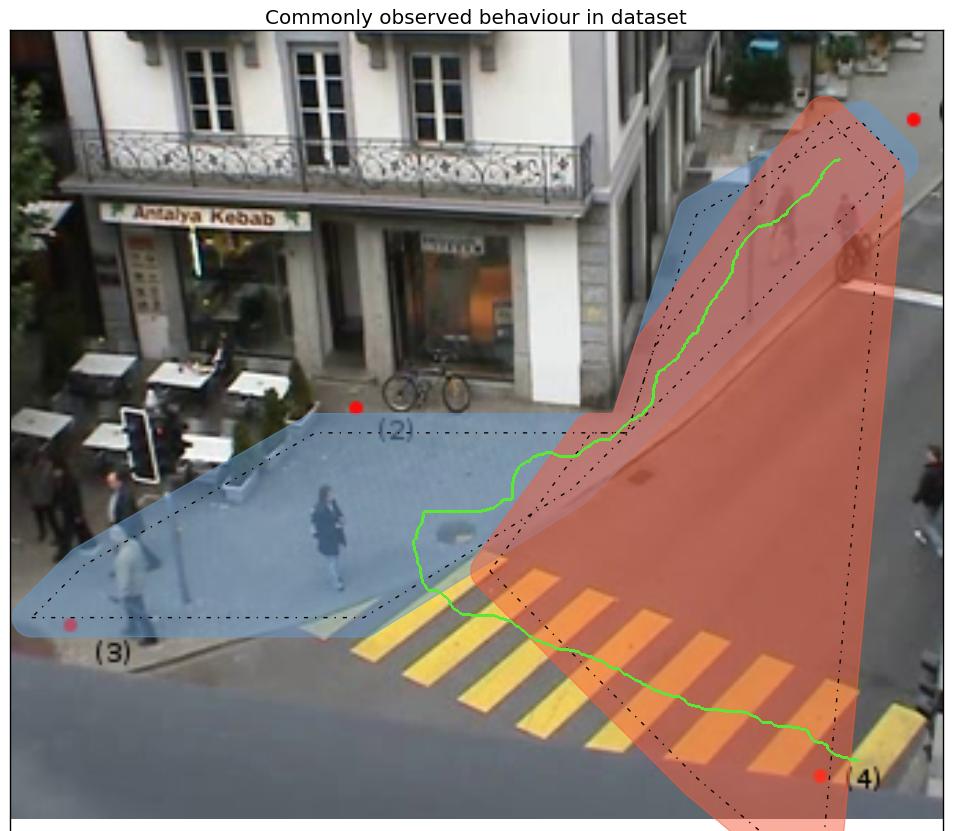

The objective of modeling urban behavior is to predict the trajectories of pedestrians in towns and around cars or vehicle platoons (PhD work of P. Vasishta). In 2017 we proposed to model pedestrian behaviour in urban scenes by combining the principles of urban planning with the sociological concept of Natural Vision. This model assumes that the environment perceived by pedestrians is composed of multiple potential fields that influence their behaviour. These fields are derived from static scene elements such as side-walks, cross-walks, buildings and shop entrances, and from dynamic obstacles such as cars and buses. This work was published in [30], [27]. Next year will be dedicated to combining this model with Growing Hidden Markov Models (GHMM) [86] in order to infer probable pedestrian paths in the scene and to predict, for example, legal and illegal crossings (see Fig. 13).
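As a rough illustration of the potential-field idea, the sketch below sums Gaussian potentials contributed by individual scene elements: negative weights stand for attractive elements such as cross-walks or shop entrances, positive weights for repulsive ones such as cars. The Gaussian form, the hypothetical `SceneElement` type, the weights and the spreads are all assumptions made for illustration, not the parameters of the published model; line-shaped elements such as side-walks are simplified to point sources here.

```python
import math
from dataclasses import dataclass


@dataclass
class SceneElement:
    """Hypothetical scene element contributing one Gaussian potential."""
    name: str
    x: float
    y: float
    weight: float  # assumption: > 0 repulsive (e.g. car), < 0 attractive (e.g. cross-walk)
    sigma: float   # assumption: spatial spread of the influence, in metres


def potential(px: float, py: float, elements: list[SceneElement]) -> float:
    """Sum the Gaussian potentials of all elements at point (px, py).

    Lower values are taken to be more attractive to a pedestrian.
    """
    total = 0.0
    for e in elements:
        d2 = (px - e.x) ** 2 + (py - e.y) ** 2
        total += e.weight * math.exp(-d2 / (2.0 * e.sigma ** 2))
    return total


def potential_map(width: int, height: int, elements: list[SceneElement],
                  resolution: float = 1.0) -> list[list[float]]:
    """Evaluate the combined field on a regular grid (rows follow y, columns follow x)."""
    return [
        [potential(c * resolution, r * resolution, elements) for c in range(width)]
        for r in range(height)
    ]


if __name__ == "__main__":
    scene = [
        SceneElement("crosswalk", 5.0, 2.0, weight=-1.0, sigma=1.5),
        SceneElement("shop_entrance", 1.0, 8.0, weight=-0.5, sigma=1.0),
        SceneElement("car", 8.0, 2.0, weight=2.0, sigma=1.0),
    ]
    grid = potential_map(10, 10, scene)
    print(f"potential at the cross-walk: {grid[2][5]:.2f}")  # negative: attractive
    print(f"potential at the car:        {grid[2][8]:.2f}")  # positive: repulsive
```

A trajectory predictor could then, for instance, favour paths that descend this field; how the fields are weighted and combined in the actual model is described in [30], [27].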

Learning task-based motion planning

Participants : Christian Wolf, Jilles Dibangoye, Laetitia Matignon, Olivier Simonin.

Our goal is the automatic learning of robot navigation in human-populated environments, driven by specific tasks and based on visual input. The robot navigates autonomously in the environment in order to solve a specific problem, which can either be posed explicitly and encoded in the algorithm (e.g. recognizing the current activities of all the actors in the environment) or be given in encoded form as an additional input. Addressing these problems requires competences in computer vision, machine learning and robotics (navigation and path planning).

We started this work at the end of 2017, following the arrival of C. Wolf, by combining reinforcement learning and deep learning. The underlying scientific challenge is to automatically learn representations that allow the agent to solve the multiple sub-problems required by the task. In particular, the robot needs to learn a metric representation (a map) of its environment from a sequence of ego-centric observations. Secondly, to solve the problem, it needs to build a representation that encodes the part of the history of ego-centric observations that is relevant to the recognition problem. Both representations need to be connected so that the robot can learn to navigate in a way that solves the problem. Learning these representations from limited information is a challenging goal.


Modeling human flows from robot(s) perception

Participants : Jacques Saraydaryan, Fabrice Jumel, Olivier Simonin.

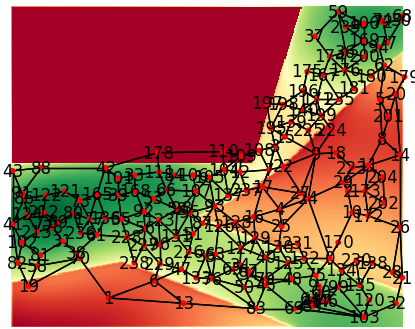

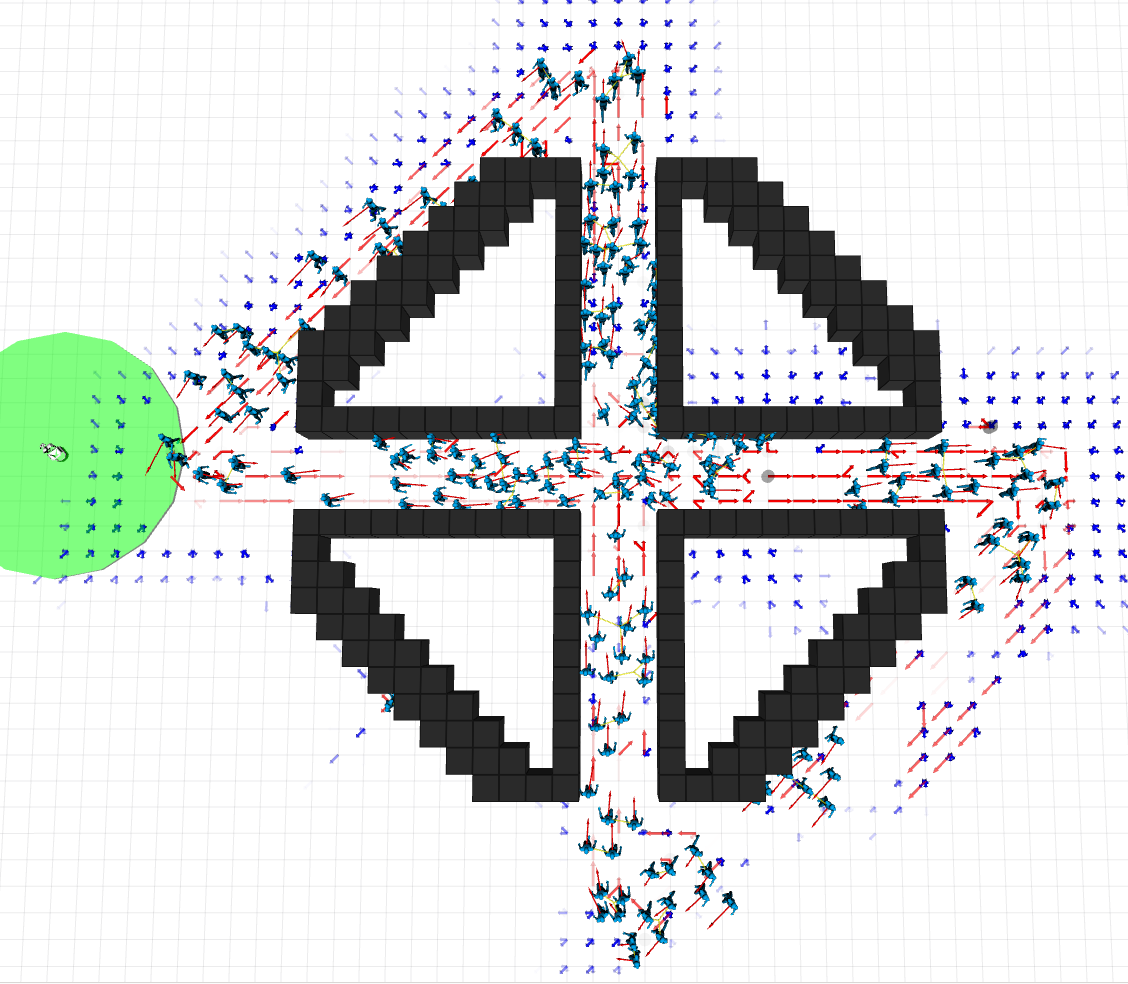

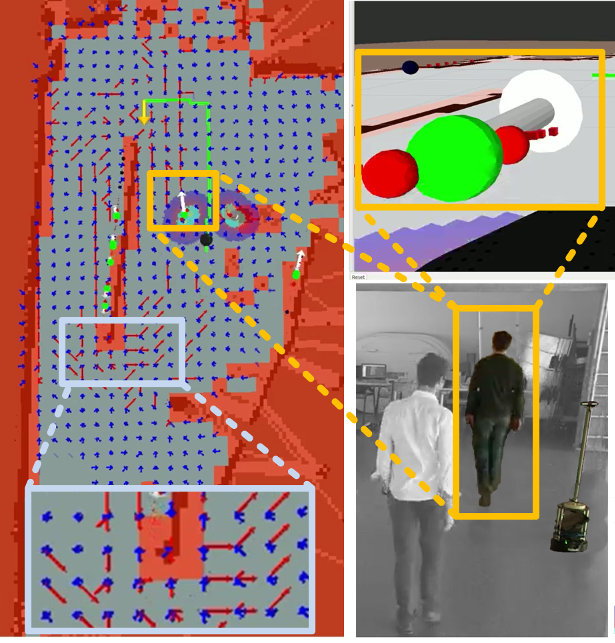

In order to deal with robot navigation in dense human-populated environments, e.g. in flows of humans, we investigate the problem of mapping these flows. The challenge is to build such a map from robot perceptions while the robots move autonomously to perform tasks in the environment. We developed a statistical learning approach (i.e. a counting-based grid model) that computes, in each cell, the likelihood of crossing a human moving in each possible direction (see the red vectors in Fig. 14.a). We extended this flow grid model with a human motion predictive model based on the von Mises motion pattern, which allows us to "accelerate" the flow grid mapping (see the blue vectors in Fig. 14.a).
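A minimal sketch of a counting-based flow grid, assuming that headings are discretised into eight 45° sectors and that each perception step reports the set of cells in the robot's field of view together with the position and heading of each detected person. The class and method names (`FlowGrid`, `update`, `likelihood`) are hypothetical, and the von Mises predictive extension is not modeled: the per-direction value is simply the number of people seen moving in that direction divided by the number of times the cell was observed.

```python
import math
from collections import defaultdict

N_DIRECTIONS = 8  # assumption: 8 heading sectors of 45 degrees, bin 0 centred on +x


def direction_bin(heading_rad: float) -> int:
    """Map a heading (radians, counter-clockwise from +x) to a sector index in [0, N_DIRECTIONS)."""
    sector = 2.0 * math.pi / N_DIRECTIONS
    # Shift by half a sector so bin 0 is centred on heading 0; the final modulo
    # guards against floating-point results equal to 2*pi.
    return int(((heading_rad + sector / 2.0) % (2.0 * math.pi)) // sector) % N_DIRECTIONS


class FlowGrid:
    """Hypothetical counting-based flow grid: per cell, per-direction human counts."""

    def __init__(self, cell_size: float = 0.5):
        self.cell_size = cell_size
        self.visits = defaultdict(int)                         # cell -> times observed
        self.counts = defaultdict(lambda: [0] * N_DIRECTIONS)  # cell -> humans per direction

    def cell_of(self, x: float, y: float) -> tuple[int, int]:
        """Return the (row, col) cell containing the metric point (x, y)."""
        return int(y // self.cell_size), int(x // self.cell_size)

    def update(self, observed_cells, humans) -> None:
        """Integrate one perception step.

        observed_cells: (row, col) cells inside the sensor field of view at this step.
        humans: (x, y, heading_rad) of each detected person.
        """
        for cell in observed_cells:
            self.visits[cell] += 1
        for x, y, heading in humans:
            self.counts[self.cell_of(x, y)][direction_bin(heading)] += 1

    def likelihood(self, cell, d: int) -> float:
        """Empirical rate of humans moving in direction d per observation of the cell."""
        n = self.visits.get(cell, 0)
        if n == 0:
            return 0.0  # never observed: no information, treated as empty
        return self.counts.get(cell, [0] * N_DIRECTIONS)[d] / n


if __name__ == "__main__":
    grid = FlowGrid(cell_size=1.0)
    cell = (2, 3)
    for step in range(4):
        # A person walks east (+x) through cell (2, 3) in 3 of the 4 observations.
        people = [(3.5, 2.5, 0.0)] if step < 3 else []
        grid.update([cell], people)
    print(grid.likelihood(cell, direction_bin(0.0)))      # 0.75 (east)
    print(grid.likelihood(cell, direction_bin(math.pi)))  # 0.0 (west)
```

Normalising by the number of observations, rather than by the number of people seen, keeps the distinction between a cell that is rarely crossed and one that is constantly crossed; normalising by people would only give the distribution of directions.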

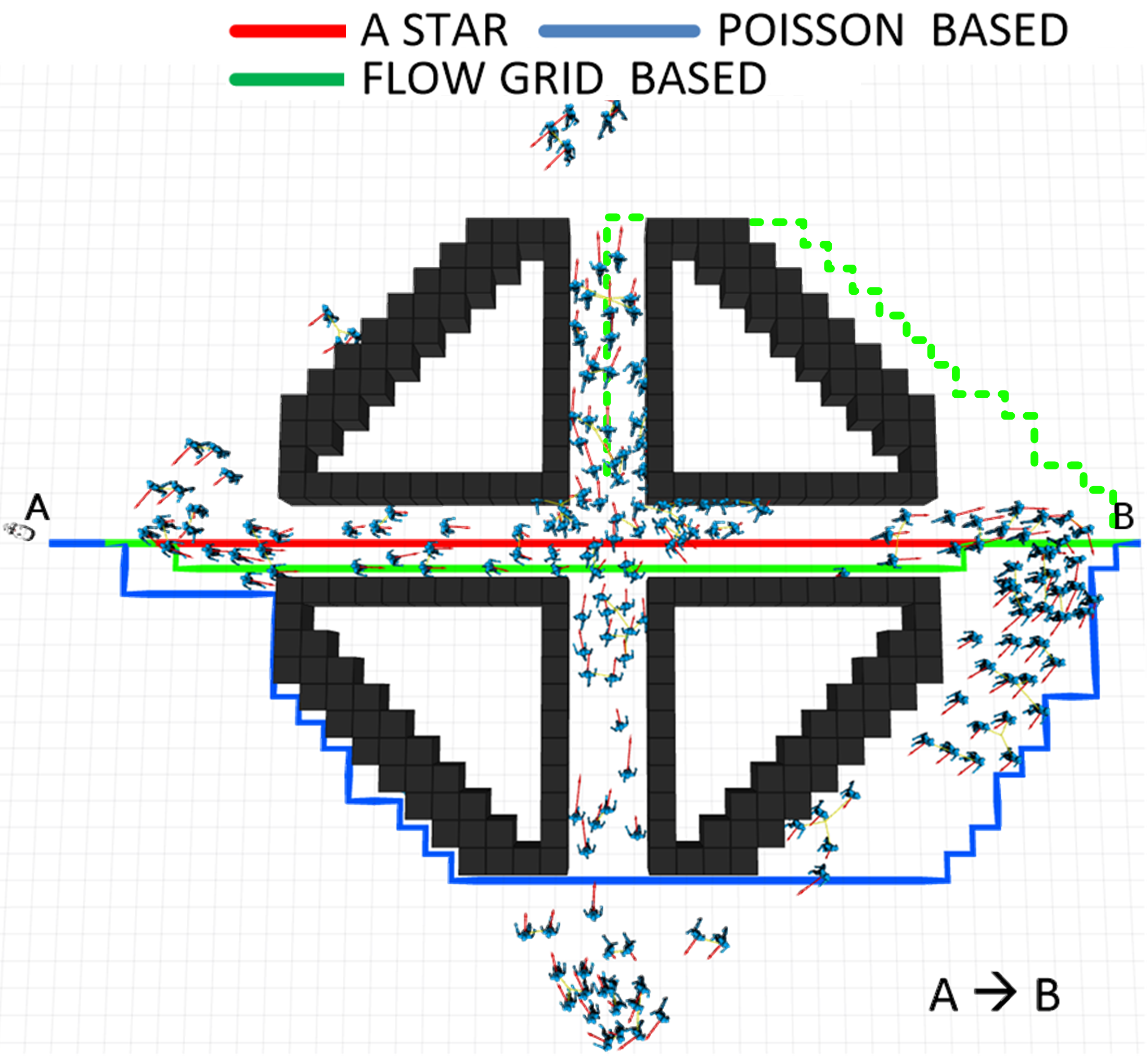

We then examined how path planning can benefit from such a flow grid, that is, by taking into account the risk for a robot of encountering humans moving in the opposite direction. We first implemented the flow grid model in a simulator built upon PedSim and ROS tools, which can simulate mobile robots and crowds of pedestrians. We compared three A*-based path-planning models using different levels of information about human presence: a non-informed model, a grid of human presence likelihood proposed by Tipaldi [83], and our grid of human motion likelihood (see Fig. 14.b). Experiments in simulation and with real robots showed that the flow grid allows the planner to build efficient paths through human flows (see Fig. 14.c). These results have been published in ECRM [16].
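To make the idea concrete, the following sketch runs A* on a 4-connected grid where entering a cell costs 1 plus `alpha` times the rate of humans moving in the opposite direction in that cell. The cost formula, the `alpha` weight, the hypothetical `flow_rate` callback and the direction convention (8 heading bins, bin 0 = +x, rows growing with +y) are assumptions chosen for illustration rather than the cost function used in [16]; setting `alpha = 0` recovers a non-informed planner.

```python
import heapq
from typing import Callable, Optional

Cell = tuple[int, int]
# 4-connected moves: (d_row, d_col, heading bin); bins 0/2/4/6 = +x/+y/-x/-y.
MOVES = [(0, 1, 0), (1, 0, 2), (0, -1, 4), (-1, 0, 6)]


def plan(start: Cell, goal: Cell, rows: int, cols: int,
         flow_rate: Callable[[Cell, int], float], alpha: float = 5.0,
         blocked: frozenset = frozenset()) -> Optional[list[Cell]]:
    """A* where entering a cell costs 1 + alpha * (rate of humans moving the opposite way)."""
    def h(c: Cell) -> int:
        # Manhattan distance: admissible and consistent because every step costs >= 1.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0.0, start)]
    g = {start: 0.0}
    parent: dict[Cell, Cell] = {}
    while open_heap:
        _, cost, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = [cur]
            while cur in parent:  # walk back to the start
                cur = parent[cur]
                path.append(cur)
            return path[::-1]
        if cost > g[cur]:
            continue  # stale heap entry, a cheaper route was already found
        for dr, dc, d in MOVES:
            nxt = (cur[0] + dr, cur[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols) or nxt in blocked:
                continue
            opposite = (d + 4) % 8  # humans walking against the robot's motion
            new_cost = cost + 1.0 + alpha * flow_rate(nxt, opposite)
            if new_cost < g.get(nxt, float("inf")):
                g[nxt] = new_cost
                parent[nxt] = cur
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


if __name__ == "__main__":
    # Toy corridor: the middle row carries a strong westward (bin 4) human flow.
    rate = lambda cell, d: 0.9 if cell[0] == 1 and d == 4 else 0.0
    print(plan((1, 0), (1, 5), 3, 6, rate, alpha=0.0))  # straight through the flow
    print(plan((1, 0), (1, 5), 3, 6, rate, alpha=5.0))  # detours via row 0 or row 2
```

In this toy corridor the non-informed planner goes straight against the flow, while the flow-aware planner accepts two extra moves to travel in a row with no opposing humans; the value of `alpha` sets how many extra steps the robot is willing to pay to avoid a given flow.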

This work will allow us to develop new solutions to the problem of patrolling among moving people, which we named the waiters problem two years ago (see our article in the RIA journal, 2017 [11]). Indeed, if robots build a flow grid of the people they encounter and must serve, they will be able to optimize over time their strategy for deploying and regularly revisiting people.

Navigation of telepresence robots

Participants : Rémi Cambuzat, Olivier Simonin, Anne Spalanzani, Gérard Bailly [GIPSA, CNRS, Grenoble], Frédéric Elisei [GIPSA, CNRS, Grenoble].

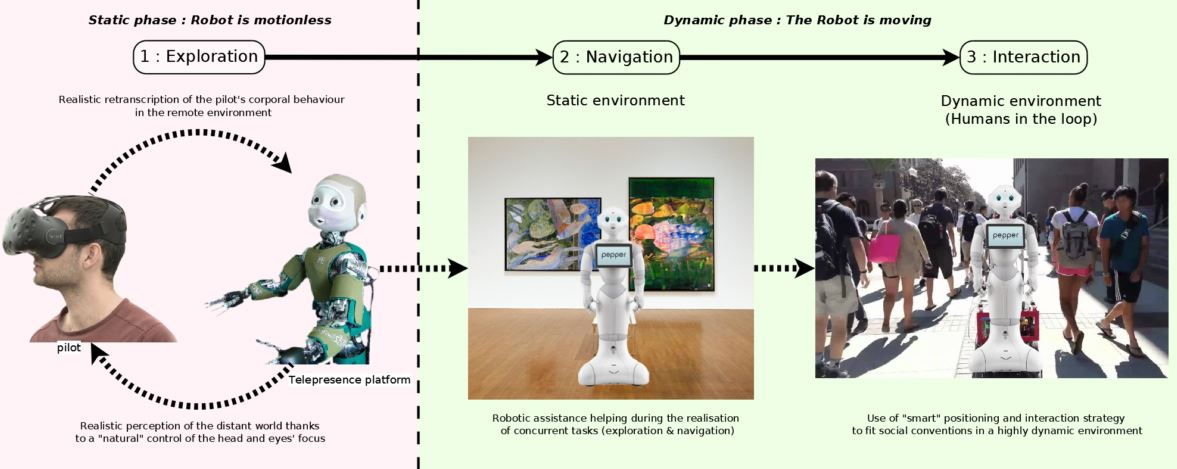

In 2016, together with the team of Gérard Bailly (GIPSA/CNRS Grenoble), we obtained regional funding for the TENSIVE project. It funds the PhD thesis of Rémi Cambuzat on immersive teleoperation of telepresence robots for verbal interaction and social navigation, started in October 2016. We have two main objectives: i) to design a new generation of immersive control platforms for telepresence robots, and ii) to teach multimodal behaviors to social robots by demonstration. In both cases, a human pilot interacts with remote interlocutors through a robotic embodiment that should faithfully reproduce the pilot's body movements while providing rich sensory and proprioceptive feedback. During social interactions, people's eyes convey a wealth of information about their direction of attention and their emotional and mental states. Endowing telepresence robots with the ability to mimic the pilot's gaze direction, and autonomous social robots with the ability to generate gaze cues, is necessary for them to interact seamlessly with humans. During the first year of the PhD thesis, we focused on the immersive teleoperation of the gaze of the Nina robot (see Fig. 15). Figure 15 summarizes the proposed methodology.